Getting Cited in GPT-5 is Table Stakes. Getting Trained Into GPT-6 is the Real Game.

Here’s what most SEOs are optimizing for in 2026:

“How do I get GPT-5 to cite my content?”

Wrong question.

The right question is: “How do I get my content INTO the training data for GPT-6, Claude 5, and Gemini 3.0?”

There’s a massive difference between being cited (real-time retrieval from current models) and being trained on (your content becoming part of the next generation’s core knowledge).

Getting cited by GPT-5 today? That’s GEO (generative engine optimization): you’re in the retrieval layer. Important, but temporary.

Getting trained into GPT-6 tomorrow? That’s permanent. Your insights, your voice, and your unique data become part of how the AI thinks for the next 18-24 months. Baked into the foundation.

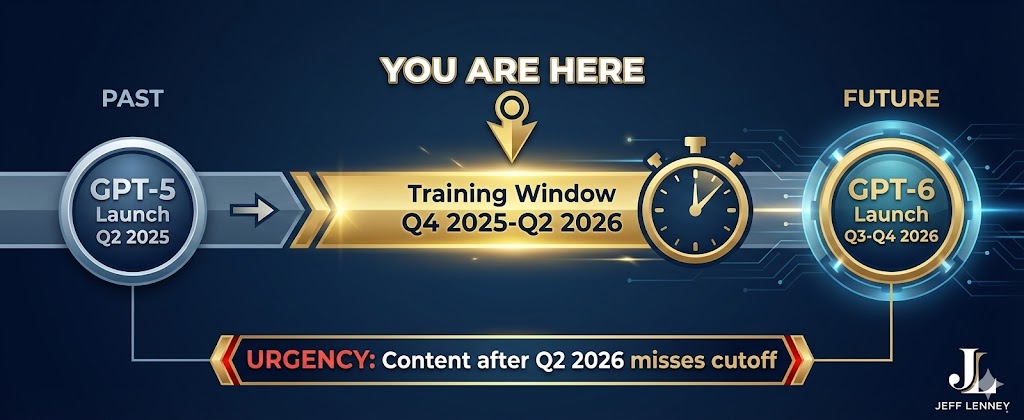

And here’s the kicker: OpenAI, Anthropic, and Google are choosing what to train on RIGHT NOW. The models releasing in late 2026 are being trained on data selected between Q4 2025 and Q2 2026.

We’re in the selection window.

If you’re not optimizing for training data selection today, you risk missing GPT-6’s cutoff.

Welcome to AI Training Data SEO – the strategy that determines whether you’re part of the next generation of AI knowledge or just another source that gets cited occasionally.

The Difference Between Citation and Training

Let me break down why this matters with a concrete example.

Citation (Real-Time Retrieval – Current Models)

How it works: When you ask GPT-5 or Perplexity a question, it searches the web in real-time, finds relevant sources, and cites them in its response.

Example: You ask “What’s the median home price in Turtle Ridge, Irvine?” and GPT-5 finds a recent article you wrote, pulls the data, and cites your URL.

Lifespan: Temporary. If your article gets deleted, moves URLs, or if a competitor publishes newer data, you lose the citation within days.

Control: High. You can update your content anytime and it affects citations immediately.

Competition: Constant. Every new article published competes for citations in real-time.

Training (Permanent Knowledge Embedding – Next Generation)

How it works: During the training phase (which happens 6-12 months before a model launches), AI companies select billions of web pages, documents, and datasets. This content is broken into “tokens,” and the model’s neural network weights are adjusted to predict those tokens, so what the content says ends up encoded in the weights.

Example: Your comprehensive guide on Orange County luxury real estate market trends gets selected for GPT-6’s training data. Now when anyone asks about OC real estate in 2027, the model’s “understanding” includes your insights – even without citing you.

Lifespan: Permanent for that model generation. Once trained, your content is baked in until the next major version (18-24 months later).

Control: Zero after cutoff. Once training ends, you can’t change what the model learned from you.

Competition: One-time selection. If you make it into the training data, competitors can’t displace you until the next model version.

The brutal truth: Most of what AI “knows” comes from training data, not real-time citations. Citations are the footnotes. Training data is the education.

GPT-5 was trained on data selected through early 2025. It “knows” what was on the internet then. Everything after that? Retrieved in real-time, cited, but not truly “learned.”

GPT-6 is being trained RIGHT NOW on data being selected between Q4 2025 and Q2 2026. What you publish in the next 90 days determines whether you’re in GPT-6’s foundation or not.

How AI Companies Actually Select Training Data

Here’s what almost nobody is talking about: AI training data selection is NOT democratic.

OpenAI doesn’t just scrape the entire web and train on everything. They use sophisticated filters, licensing deals, and quality signals to decide what gets in.

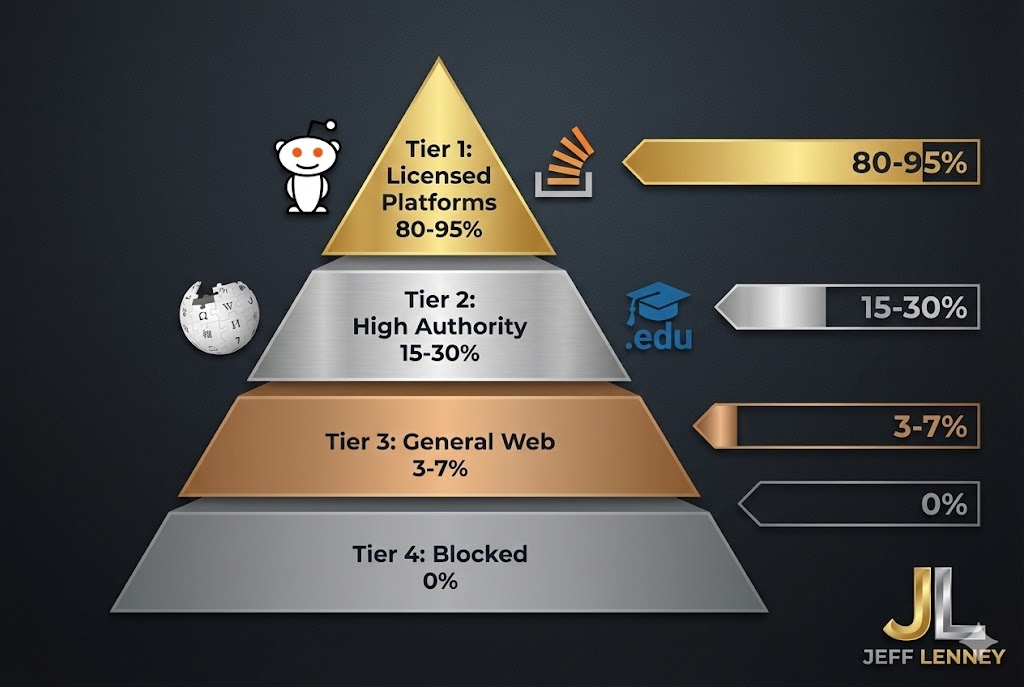

Based on what we know from OpenAI’s documentation, Anthropic’s Claude training methodology, Google’s Gemini papers, and publicly announced partnerships through 2025, here’s the hierarchy:

Tier 1: Licensed & Partnership Data (Highest Priority)

What it is: Content from sources that have direct licensing deals with AI companies.

Known partnerships as of Q4 2025:

- Reddit: $60M/year licensing deal with Google (announced February 2024, renewed 2025)

- Associated Press: Multi-year deal with OpenAI (2023-ongoing)

- Axel Springer: Licensing covers Politico, Business Insider, WELT (OpenAI deal, 2023)

- Stack Overflow: Partnership with OpenAI for coding training data (May 2024)

- Shutterstock: Image and video training data licensing (ongoing)

- Financial Times: OpenAI partnership announced Q2 2024

- Le Monde: OpenAI deal for French language training (2024)

- News Corp: Multi-year OpenAI deal including WSJ, MarketWatch, Barron’s (May 2024)

Why it matters: This content gets priority treatment. It’s legally cleared, high-quality, and represents a specific business relationship. Selection rate: estimated 80-95%.

For you: If your content lives ONLY on your website, you’re in Tier 3. If your content is ALSO on Reddit, Medium, or LinkedIn (platforms with confirmed or suspected AI data access), you have a shot at Tier 1 treatment.

Tier 2: High-Authority Public Web (Medium Priority)

What it is: Content from established, authoritative sources that are publicly accessible.

Selection criteria based on known AI training methodologies:

- Domain authority signals: Wikipedia, .edu sites, .gov sites, major news outlets (NYT, BBC, Reuters)

- Entity recognition: Is the author/brand a recognized entity in Google’s Knowledge Graph?

- Citation count: How many other authoritative sources link to or reference this content?

- Freshness vs. stability: Content that’s been consistently available for 6+ months (not brand new, not deleted)

- Engagement signals: Time on page, bounce rate, social shares, comments

- Content depth: Comprehensive, well-structured content with clear expertise signals

Why it matters: This is where most “good SEO content” lives. You can compete here, but you’re fighting millions of other sites. Selection rate: estimated 15-30%.

Tier 3: General Web Crawl (Low Priority)

What it is: Everything else – personal blogs, small business sites, individual websites without major authority signals.

Selection rate: According to research by Epoch AI analyzing GPT-3 and GPT-4 training data composition, an estimated 3-7% of the general web crawl makes it into final training datasets after quality filtering.

Why it matters: Your luxury real estate website with 300 monthly visitors? Probably not making it into training data unless you have something genuinely unique that doesn’t exist anywhere else.

Your site needs to clear multiple quality thresholds:

- Is the content original and unique? (Not summarizing existing sources)

- Does it demonstrate clear expertise? (Author credentials, specific examples, proprietary data)

- Is it well-structured? (Clear headers, proper formatting, logical flow)

- Does it have engagement signals? (Comments, shares, backlinks from real sites)

- Has it been stable? (Available for 6+ months, not constantly changing)

Tier 4: Explicitly Excluded (Blocked)

What it is: Content that’s explicitly blocked via robots.txt, paywalls, login requirements, or content that violates AI training policies.

Examples as of 2025:

- The New York Times: Blocked OpenAI crawler (GPTBot) in August 2023, ongoing lawsuit

- Most paywalled publications: Bloomberg and similar outlets (unless a partnership deal exists, as it does for WSJ under the News Corp agreement)

- Private databases: Proprietary research, member-only content

- Content behind authentication: Login walls, CAPTCHA, member areas

- Sites using robots.txt blocks: Explicitly disallowing AI crawlers

Why it matters: If you’re gating all your best content behind email forms or paywalls, it’s not getting trained on. Period.

The irony: The content you’re protecting for lead generation is the content AI companies want for training. You have to choose.
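If you want to confirm that your own site has not landed in this tier by accident, you can test your robots.txt against AI crawler user agents. The sketch below uses Python's standard `urllib.robotparser`. GPTBot is the OpenAI crawler named above; the other user-agent tokens, the sample robots.txt, and the example.com URL are assumptions for illustration, so check each vendor's current crawler documentation before relying on them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace with your site's actual file.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

# GPTBot is named in the article. The other tokens are assumptions to verify
# against each vendor's crawler documentation.
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "Google-Extended"]


def blocked_crawlers(robots_txt: str, url: str, agents=AI_CRAWLERS) -> list[str]:
    """Return the user agents that robots_txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    # Lists every crawler in AI_CRAWLERS that cannot fetch the page.
    print(blocked_crawlers(SAMPLE_ROBOTS_TXT, "https://example.com/market-report"))
```

Any crawler that shows up in the returned list is being told to stay away from that URL, whether or not that was your intent.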

The Platform Strategy: Why Distribution Location Matters More Than Content Quality

Here’s the play most SEOs are completely missing in 2026:

The same exact content published in two different places has wildly different chances of being selected for training data.

Let me show you with illustrative estimates based on known licensing deals and selection methodologies:

Scenario A: Content Only On Your Website

- Platform: YourRealEstateSite.com (Domain Authority 35, 300 monthly visitors)

- Content: “Complete Guide to Orange County Luxury Real Estate Market Trends 2026”

- Training selection probability: ~5-8% (Tier 3 – general web crawl with quality filters)

- Why low: No licensing deal, moderate authority, small audience, single source, no cross-platform validation

Scenario B: Content Syndicated to Reddit + Your Website

- Platform 1: r/RealEstate, r/OrangeCounty (Reddit has ongoing Google licensing deal worth $60M+/year)

- Platform 2: Your website (original source, canonical link)

- Content: Same guide, adapted for Reddit format (more conversational, bullet points, community engagement)

- Training selection probability: ~40-65% (Tier 1 licensed platform + Tier 2/3 public web validation)

- Why higher: Reddit content is priority training data, cross-platform presence signals authority, community engagement validates quality

The difference? By these estimates, you just went from a 5-8% chance to a 40-65% chance of being in GPT-6’s training data.

Same content. Same insights. Different platform.

This is why the Search Everywhere strategy isn’t just about visibility today – it’s about training data selection tomorrow.

High-Value Training Platforms (Confirmed or Strongly Suspected)

Based on publicly announced deals, partnership press releases, and model output analysis through Q4 2025:

Tier 1 Platforms (Confirmed AI Training Partnerships):

- Reddit: Google Gemini deal ($60M/year, announced February 2024)

- Stack Overflow: OpenAI partnership (May 2024, ongoing)

- Associated Press: OpenAI multi-year deal

- News Corp properties: WSJ, MarketWatch, Barron’s (OpenAI deal, May 2024)

- Financial Times: OpenAI partnership (Q2 2024)

Tier 1.5 Platforms (Strong Evidence, Not Publicly Confirmed):

- Medium: Consistently appears in training dataset analysis, high-quality long-form content

- Quora: Suspected based on Poe AI integration and content patterns in model outputs

- LinkedIn: Microsoft (OpenAI’s primary investor and partner) has full access

- YouTube: Google-owned, confirmed for multimodal training (Gemini 2.0 and beyond)

- GitHub: Microsoft-owned, used for Copilot and GPT coding capabilities

Strategy implication: Publish your best insights on these platforms FIRST or simultaneously with your website. Don’t wait until after your blog post “performs well” – by then, training data selection may have passed.

Content Formatting for Training Data Ingestion

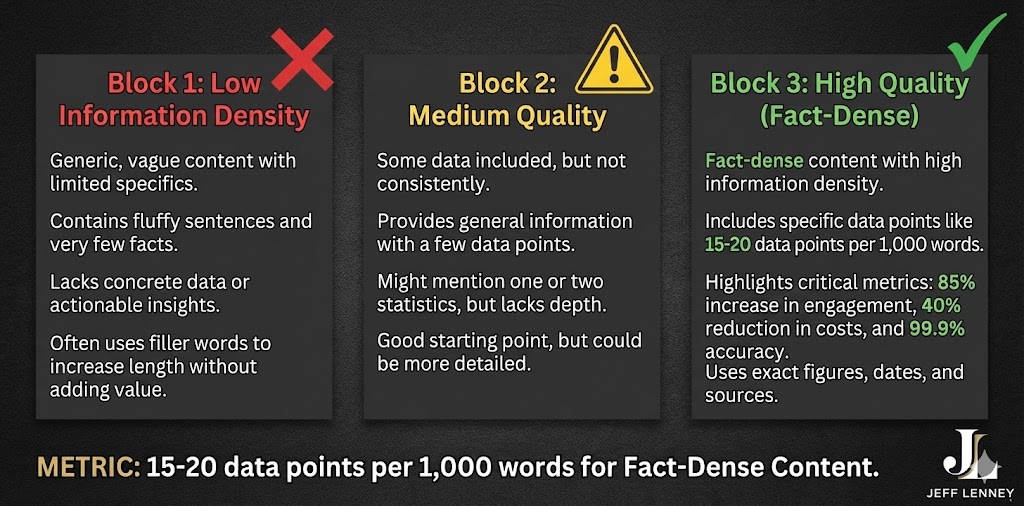

Not all content is equally “trainable.”

AI models prefer certain content structures because they compress better into tokens, provide clearer learning signals, and demonstrate higher information density.

Here’s what we know from analyzing training data patterns, OpenAI’s technical documentation, and Anthropic’s research papers:

High Training Value Content Formats

1. Fact-Dense, Structured Information

AI models prioritize content with high information-per-token ratios.

High training value:

“Orange County luxury real estate market analysis (Q4 2025): Median home price in Newport Beach: $3.4M (+6.8% YoY). Turtle Ridge, Irvine: $2.3M (+7.2% YoY). Shady Canyon, Irvine: $5.8M (+4.1% YoY). Days on market: 38 (Newport), 34 (Turtle Ridge), 52 (Shady Canyon). Active inventory: 142 listings Newport Beach (down 18% from Q4 2024), 47 listings Turtle Ridge (down 31%), 12 listings Shady Canyon (down 25%). Cash transactions: 47% of sales in Newport Beach, 52% Turtle Ridge, 68% Shady Canyon.”

Low training value:

“The Orange County luxury real estate market continues to demonstrate robust performance with strong appreciation across key submarkets. Premium coastal communities are experiencing sustained buyer interest, while exclusive guard-gated enclaves maintain their position as highly desirable destinations for discerning purchasers seeking privacy and prestige.”

See the difference? The first example is 100% Information Gain – unique, specific data points that don’t exist elsewhere. The second is generic marketing fluff.

Why it matters for training: When GPT-6 is learning about Orange County real estate, it wants the first example. The second example gets filtered out during quality selection as low-information-density content.

Target metrics for high training value:

- Minimum 15-20 unique data points per 1,000 words

- At least 30% of data should be original (not available elsewhere online)

- Specific numbers for every major claim

- Named entities (people, companies, locations) mentioned with context

- Dates and timeframes for all temporal claims
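To check a draft against the first target above, a rough density count is enough. The sketch below is a crude proxy that treats every number in the text as one data point; it says nothing about how any AI company actually scores content, and the function names and the regex are assumptions made for illustration.

```python
import re

# Crude proxy: every number ("3.4" in "$3.4M", "6.8" in "6.8%", "38") counts
# as one data point. Quarter labels such as "Q4" are counted too.
NUMBER_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def data_points_per_1000_words(text: str) -> float:
    """Numbers found in the text, scaled to a per-1,000-word rate."""
    words = text.split()
    if not words:
        return 0.0
    return 1000 * len(NUMBER_PATTERN.findall(text)) / len(words)


def meets_density_target(text: str, minimum: float = 15.0) -> bool:
    """True if the text reaches the article's lower target of 15 per 1,000 words."""
    return data_points_per_1000_words(text) >= minimum


if __name__ == "__main__":
    draft = "Median price in Newport Beach: $3.4M (+6.8% YoY). Days on market: 38."
    print(data_points_per_1000_words(draft), meets_density_target(draft))
```

A count like this cannot tell original data from recycled data, so treat it as a floor check rather than a quality score.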

2. Canonical Definitions and Explanations

Training data that clearly defines concepts gets heavily weighted because it teaches the model foundational knowledge.

Optimal format:

“[Term] is [clear definition under 300 characters]. It differs from [related term] in that [specific distinction in 1-2 sentences]. Common applications include [3-5 concrete examples with specifics]. Key considerations: [2-3 critical factors with data].”

Example:

“A Pocket Listing is a residential property sale conducted privately without MLS listing or public marketing, representing approximately 8-12% of luxury transactions in Orange County as of 2025. It differs from an Off-Market Listing (which may have limited marketing to select agents) in that Pocket Listings have zero marketing beyond direct agent-to-agent communication. Common applications include celebrity properties seeking privacy (67% of transactions over $10M), estate sales avoiding public attention (23%), and test-pricing scenarios (18%). Key considerations: Pocket Listings typically sell for 3-7% below comparable MLS-listed properties due to limited buyer competition, take 28% longer to close (median 67 days vs. 52 days), and may face legal challenges in some states requiring MLS disclosure.”

This format is training data gold because:

- Clear definition (model learns what the term means)

- Explicit comparison (model learns distinctions between related concepts)

- Concrete examples with percentages (model learns real-world application patterns)

- Data-backed considerations (model learns decision factors)

3. Step-by-Step Processes and Methodologies

AI models trained on procedural knowledge can later generate instructions for users and understand complex workflows.

Optimal format:

- Step name with outcome: Specific action with measurable result or decision point

- Step name with outcome: Specific action with measurable result or decision point

- Step name with outcome: Specific action with measurable result or decision point

Example:

“How to price a luxury property in a declining market (5-step methodology):

- Comp analysis with market adjustment (Days 1-3): Identify 7-10 comparable sales from past 6 months, apply -0.5% to -1.2% monthly decline factor based on current absorption rate. If months-of-supply >8, use higher decline factor. If <4, use lower factor.

- Absorption rate calculation (Day 4): Current luxury inventory (>$2M) ÷ average monthly sales (trailing 90 days) = months of supply. Target: 4-6 months for neutral pricing. >6 months requires aggressive pricing. <4 months supports premium pricing.

- Days-on-market analysis (Day 5): Calculate median DOM for sold comparables. Properties priced within 3% of comps sell in 34-42 days. Properties priced 5-8% above comps average 67-89 days. Properties >10% above comps average 127+ days or expire.

- Price positioning strategy (Day 6): Set initial price 2-4% below most recent comp if months-of-supply >6. At market if 4-6 months. Can consider 1-2% premium if <4 months AND property has superior features. Never price >5% above comps in declining market.

- 30-day review trigger (Ongoing): If no offers within 30 days, reduce 3-5%. If no showings within 14 days, reduce 5-7%. If multiple offers within 7 days, property was underpriced (inform seller of market velocity for future reference).”

Why this works for training: Procedural knowledge is extremely valuable. The model learns not just facts, but methodologies it can apply to new scenarios. When someone asks GPT-6 “How do I price a luxury home?” and this methodology was in training data, the model’s response will reflect this framework.
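The absorption-rate and price-positioning steps in the example reduce to simple arithmetic. Here is a minimal sketch of steps 2 and 4 using the thresholds from the example; the function names are hypothetical, and the "at market" fallback for a tight market without superior features is an assumption, since the example does not cover that case.

```python
def months_of_supply(active_listings: int, sales_last_90_days: int) -> float:
    """Active inventory divided by average monthly sales (trailing 90 days)."""
    if sales_last_90_days <= 0:
        raise ValueError("need at least one sale in the trailing 90 days")
    average_monthly_sales = sales_last_90_days / 3
    return active_listings / average_monthly_sales


def price_adjustment_range(supply_months: float,
                           superior_features: bool = False) -> tuple[float, float]:
    """Return (low, high) adjustment vs. the most recent comp, as fractions."""
    if supply_months > 6:
        return (-0.04, -0.02)   # price 2-4% below the most recent comp
    if supply_months >= 4:
        return (0.0, 0.0)       # neutral market: price at market
    if superior_features:
        return (0.01, 0.02)     # tight market plus superior features: 1-2% premium
    return (0.0, 0.0)           # assumption: otherwise stay at market


if __name__ == "__main__":
    # Hypothetical inputs: 47 active listings, 21 sales in the last 90 days.
    supply = months_of_supply(47, 21)
    print(round(supply, 1), price_adjustment_range(supply))
```

Writing the thresholds as code also exposes the edge cases the prose leaves open, such as exactly 6 months of supply, which this sketch treats as neutral.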

Low Training Value Content (Gets Filtered Out)

Content types that get excluded during training data quality filtering:

- Duplicate content: If 50 sites say the exact same thing, maybe 1-2 versions get trained on, and the rest are filtered as redundant

- Promotional content: Anything that reads primarily like an advertisement gets heavily downweighted or excluded

- Opinion without data: “I think luxury real estate will boom in 2026” (no value) vs. “Luxury inventory declined 31% in Q4 2025 vs. Q4 2024” (high value)

- Broken or incomplete content: Articles with missing sections, broken formatting, incomplete thoughts, technical errors

- Low-quality writing: Grammatical errors, unclear phrasing, and run-on sentences signal low quality to filtering algorithms

- Thin content: Articles under 300 words rarely make training cuts unless they’re definitions or highly specific data points

- Clickbait without substance: “You Won’t Believe What Happened to OC Real Estate!” with no actual data or insights

- Outdated information: Content clearly marked as old or superseded gets lower priority than current information
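To make the duplicate-content point concrete, here is one generic way near-duplicates can be detected: compare overlapping word sequences ("shingles") and measure their overlap with Jaccard similarity. This illustrates the general technique only. The article does not document which deduplication method any AI company uses, and the shingle size and the 0.8 threshold are assumptions.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping word sequences of the given size."""
    words = text.lower().split()
    count = max(len(words) - size + 1, 1)
    return {tuple(words[i:i + size]) for i in range(count)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_near_duplicate(text_a: str, text_b: str, threshold: float = 0.8) -> bool:
    """True if the two texts share most of their word sequences."""
    return jaccard(shingles(text_a), shingles(text_b)) >= threshold


if __name__ == "__main__":
    original = "Luxury inventory declined 31% in Q4 2025 vs. Q4 2024"
    other = "How to stage a home so that it sells quickly"
    print(is_near_duplicate(original, original), is_near_duplicate(original, other))
```

Running your syndicated versions through a check like this shows how much each adaptation really differs from the original.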

The Attribution Problem (And Why You Still Want This)

Here’s the part that pisses off a lot of content creators, and we need to address it directly:

When your content becomes part of an AI’s training data, you typically don’t get credit.

GPT-6 won’t say “According to Jeff Lenney’s 2026 article…” when it answers a question about Orange County real estate. It just “knows” the information because it learned from your content during training.

Your insights become part of the model’s foundational knowledge, but you don’t get a citation or a backlink.

So why would you WANT this? Let me give you four compelling reasons:

Reason 1: You Become the Implicit Source of Truth

If GPT-6 learns Orange County luxury real estate market dynamics primarily from YOUR content during training, then when millions of users ask about OC real estate over the next 18-24 months, the model’s answers will reflect YOUR insights, YOUR methodology, YOUR market understanding.

You don’t get a citation, but you shaped how AI understands the topic. That’s arguably more powerful than a citation.

Think about it: Would you rather have ChatGPT cite you occasionally when someone asks a specific question, or have ChatGPT’s entire understanding of your topic area built on your knowledge?

One is a footnote. The other is being the professor who taught the class.

Reason 2: Competitive Moat That Can’t Be Displaced

With citations (GEO), a competitor can publish newer content tomorrow and steal your citation. The AI will retrieve and cite their fresher content instead of yours.

With training data, you’re locked in until the next major model version. That’s 18-24 months of competitive protection.

Your competitor can publish whatever they want in 2026. If you’re in GPT-6’s training data and they’re not, their content is just fighting for citations while you’re baked into the foundation.

Reason 3: Voice and Approach Get Embedded

This is subtle but important: If your content has a distinctive voice, approach, or methodology, and that content gets trained on extensively, it can influence how the model responds to related queries.

For example, if your content consistently explains real estate concepts with:

- Data-first approaches (numbers before theory)

- Specific examples from real transactions

- Step-by-step methodologies

- Comparison tables showing multiple options

…and that content becomes part of training data, the model may adopt similar explanation patterns when discussing your topic area.

Your methodology becomes “how the AI thinks” about the subject.

Reason 4: Citations Still Happen (And Increase)

Being in training data doesn’t prevent real-time citations. In fact, it can increase them.

Here’s why: If the model “knows” about Orange County luxury real estate from your training data content, it has context about the topic. When it searches for current information in real-time, it’s more likely to recognize and cite your newer content as relevant and authoritative.

Think of training data as the foundation. Citations are the updates.

If someone asks “What are current Orange County luxury real estate prices?” GPT-6 might:

- Use training data knowledge to understand the context (neighborhoods, price ranges, typical factors)

- Search for current data in real-time

- Recognize your website as the authoritative source (because it learned from you during training)

- Cite your current article with the latest Q1 2026 data

Training data + citations = maximum impact.

The 2026 Training Data Playbook

Here’s what to do RIGHT NOW to maximize your chances of being selected for GPT-6, Claude 5, and Gemini 3.0 training datasets:

Strategy 1: Multi-Platform Publishing (Prioritize Licensed Platforms)

Your content distribution hierarchy for Q1 2026:

Priority Platforms (Publish Here FIRST or Simultaneously):

- Reddit: r/RealEstate, r/OrangeCounty, r/LuxuryRealEstate, r/Investing, r/PersonalFinance (Google licensing deal)

- LinkedIn: Long-form articles (not just posts), in-depth thought leadership (Microsoft/OpenAI access)

- Medium: Methodology pieces, market analysis, thought leadership (strong training data presence based on analysis)

- Your Website: Always maintain the canonical version with full detail, proper schema, complete data

Why this specific order:

- Reddit and LinkedIn have confirmed or highly likely AI training partnerships

- Medium has high authority and consistent appearance in training dataset analysis

- Your website is your canonical source and builds entity authority

Platform-Specific Adaptation Strategy:

Reddit format:

- Problem-first titles that match how people actually search: “How do I price a luxury home in a declining market?”

- Conversational tone, but keep all data points intact

- Bullet points and numbered lists (Reddit users love scannable content)

- Engage in comments to build authority and engagement signals

- Link to your website in profile, not in post (avoid self-promotion flags)

LinkedIn format:

- Professional angle, tie to industry trends and market shifts

- Start with a hook that relates to business outcomes: “The luxury real estate pricing model that’s been used for 20 years just broke. Here’s what’s replacing it.”

- Maintain all specific data points and methodologies

- Use subheadings for LinkedIn’s article format

- Include a brief bio at end with link to full article on your site

Medium format:

- Longer-form, more narrative-driven, but keep methodology and facts

- Can be more personal: “After analyzing 247 luxury transactions in 2025, here’s what I learned…”

- Medium rewards depth – aim for 2,000+ words with substantive insights

- Use Medium’s built-in formatting for quotes, callouts, section breaks

Your website format:

- Most comprehensive version with complete data, examples, and resources

- Proper schema markup (Article, FAQPage, HowTo)

- Internal links to related content

- Optimized for both GEO (citations) and training data (depth)
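For the schema markup item, here is a minimal sketch of generating Article JSON-LD with Python's standard `json` module. The property names come from the schema.org Article type; the headline, author name, date, and URL are placeholder values, and the helper name is hypothetical. Structured data documents the page for machines, and nothing here implies it changes training selection.

```python
import json


def article_json_ld(headline: str, author_name: str,
                    date_published: str, url: str) -> str:
    """Build a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(article_json_ld(
        "Q4 2025 Orange County Luxury Real Estate Report",
        "Jane Doe",
        "2026-01-15",
        "https://example.com/q4-2025-report",
    ))
```

The output goes inside a `<script type="application/ld+json">` tag in the page's HTML.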

Strategy 2: Maximize Information Density

Every piece of content should have a high information-to-word ratio. Fluff gets filtered out during training data quality checks.

Target metrics for training data optimization:

- Data points: Minimum 15-20 unique facts per 1,000 words

- Specific numbers: Every claim backed by a number, percentage, or concrete example

- Original research: At least 30% of data points should be unique to your content (not available elsewhere)

- Named entities: Reference specific people, companies, locations, dates (helps with entity recognition)

- Temporal markers: Always specify “as of Q4 2025” or “January 2026” rather than “recently” or “currently”

Low information density (gets filtered):

“Luxury real estate in Orange County has shown strong performance in recent years, with many neighborhoods seeing significant appreciation. Buyers continue to show interest in coastal areas and highly-rated school districts. The market remains competitive for well-priced properties.”

High information density (training target):

“Orange County luxury real estate (properties >$2M) appreciated 47.3% from Q1 2020 to Q4 2025, outpacing the county median appreciation of 38.7%. Top performers by appreciation: Crystal Cove (+72.8%), Nellie Gail Ranch (+64.2%), Shady Canyon (+61.9%), Turtle Ridge (+58.7%). Coastal premium averages $923/sqft vs. $687/sqft inland (34% premium). Properties in top-10 Orange County school districts (API >950) sold 27% faster (median 34 days vs. 47 days) and commanded 21% higher price-per-sqft ($856/sqft vs. $708/sqft) than comparable properties in lower-ranked districts during 2025.”

The second example has roughly 15 specific figures in 4 sentences. The first has zero data points in 3 sentences.

Which one do you think gets selected for training data?
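One of the targets above, explicit temporal markers, is easy to check mechanically. The sketch below flags vague time words in a draft so you can replace them with dated statements. “Recently” and “currently” come from the target list; the rest of the word list and the function name are assumptions you should adapt.

```python
import re

# "recently" and "currently" are from the article; the other terms are assumed.
VAGUE_TEMPORAL = re.compile(
    r"\b(?:recently|currently|lately|nowadays|in recent years)\b",
    re.IGNORECASE,
)


def vague_temporal_terms(text: str) -> list[str]:
    """Return each vague time expression found, lowercased, in order."""
    return [match.group(0).lower() for match in VAGUE_TEMPORAL.finditer(text)]


if __name__ == "__main__":
    draft = "Luxury real estate has shown strong performance in recent years."
    print(vague_temporal_terms(draft))
```

An empty list means every time reference in the draft is at least not one of the known vague ones; it does not prove each claim is dated.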

Strategy 3: Create Canonical Reference Content

AI training datasets prioritize authoritative reference content that defines terms, establishes frameworks, and teaches foundational knowledge.

Content types that over-index for training selection:

- Glossaries and comprehensive definitions: “Complete Guide to Orange County Neighborhood Terminology: 47 Terms Every Luxury Buyer Should Know”

- Frameworks and methodologies: “The 7-Step Luxury Property Valuation Framework: Data-Driven CMA Methodology”

- Comparison guides with data: “Newport Beach vs. Laguna Beach vs. Dana Point: Comprehensive Data Analysis (2020-2025)”

- Historical analysis with timelines: “Orange County Luxury Market Cycles: Complete Data History 1990-2025”

- Step-by-step procedural guides: “How to Stage a $5M+ Property: The 12-Point Checklist with ROI Data from 183 Listings”

- Market reports with original data: “Q4 2025 Orange County Luxury Real Estate Report: Transaction Analysis of 847 Sales”

Why these formats work for training:

- They establish YOU as the definitional source for a topic

- They teach the model foundational knowledge it can apply to future queries

- They’re structured in ways that compress efficiently into training tokens

- They demonstrate clear expertise through depth and specificity

- They’re reference material that remains valuable over time (not news that becomes stale)

When GPT-6 needs to learn “what is Turtle Ridge?” or “how do you value luxury properties?” or “what are the key Orange County luxury neighborhoods?” – canonical reference content is what gets selected.

Strategy 4: Optimize for Embedding and Tokenization

This is advanced, but important: AI models don’t read your content the way humans do. They break it into “tokens” and convert those tokens into mathematical representations called “embeddings.”

Content that tokenizes efficiently and creates clear embeddings is more likely to be selected and better integrated into training.

Best practices for effective tokenization:

- Clear section headers: Help models understand content structure and topic boundaries

- Consistent terminology: Use the same terms for the same concepts throughout (don’t alternate between “luxury homes” and “high-end properties” and “premium residences”)

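- Explicit relationships: When a relationship is established, state it directly. “X causes Y” is clearer than “X is related to Y,” but write “X correlates with Y” if correlation is all your data shows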

- Minimize ambiguous pronouns: “The Newport Beach property” is clearer than “it” when referring back

- Use structured data formats: Tables, lists, and clear formatting compress efficiently into tokens

- Define acronyms on first use: “Multiple Listing Service (MLS)” before using “MLS” throughout

- Hierarchical organization: Clear H2, H3, H4 structure helps models understand information hierarchy

What NOT to do (hurts tokenization efficiency):

- Overly creative or metaphorical language that obscures meaning

- Excessive use of idioms or cultural references that don’t translate

- Walls of text with no paragraph breaks or structure

- Inconsistent formatting (switching between styles mid-article)

- Ambiguous antecedents (“this strategy” when there are three strategies mentioned)

- Mixing tenses unnecessarily (stick to present or past, don’t jump around)

Remember: For training data, clarity > creativity. Save the poetic language for your marketing copy. Training data wants clear, structured, factual content.
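The consistent-terminology practice above can be checked with a few lines of code. This sketch counts how often each variant of one concept appears and flags a draft that mixes them. The variant list reuses the example phrases from the best-practices list; the function names are hypothetical, and you would supply your own synonym groups.

```python
import re

# Synonym group taken from the terminology example in the list above.
VARIANTS = ["luxury homes", "high-end properties", "premium residences"]


def variant_counts(text: str, variants=VARIANTS) -> dict[str, int]:
    """Count case-insensitive occurrences of each variant phrase."""
    lowered = text.lower()
    return {v: len(re.findall(re.escape(v), lowered)) for v in variants}


def is_consistent(text: str, variants=VARIANTS) -> bool:
    """True if the text uses at most one variant from the group."""
    used = [v for v, n in variant_counts(text, variants).items() if n > 0]
    return len(used) <= 1


if __name__ == "__main__":
    draft = "Luxury homes sell fast. High-end properties hold value."
    print(variant_counts(draft), is_consistent(draft))
```

Pick whichever variant your audience actually searches for, then replace the others throughout the piece.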

Frequently Asked Questions About AI Training Data SEO

Here are some of the most frequently asked questions!

What is AI Training Data SEO?

AI Training Data SEO is optimizing your content to be selected for inclusion in AI model training datasets (GPT-6, Claude 5, Gemini 3.0), not just for real-time citation by current models.

When AI companies build new models, they select billions of web pages to train on over a 6-12 month period. Being in that training data means your insights become part of how the AI “thinks” for the life of that model generation (18-24 months), rather than being cited only while your page wins real-time retrieval.

How is training data different from getting cited by GPT-5?

Citations are temporary and real-time. Training data is permanent and foundational.

When GPT-5 cites your content, it’s searching the web right now and pulling your article. If you delete it tomorrow or a competitor publishes newer data, the citation disappears. When your content is in GPT-6’s training data, it’s baked into the model’s neural network. The AI “learned” from your content and that knowledge persists for 18-24 months until the next major model version, regardless of whether the original content still exists online.

Which platforms have AI training data licensing deals?

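Reddit ($60M/year with Google), Stack Overflow (OpenAI), Associated Press (OpenAI), News Corp/WSJ (OpenAI), Financial Times (OpenAI), and several other major publishers. LinkedIn has no publicly confirmed licensing deal; it is included in this strategy because its owner, Microsoft, is OpenAI’s primary investor and partner.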

As of Q4 2025, confirmed deals include: Reddit-Google ($60M+/year announced February 2024, ongoing), Stack Overflow-OpenAI (May 2024), Associated Press-OpenAI, News Corp-OpenAI (WSJ, MarketWatch, Barron’s – May 2024), Financial Times-OpenAI (Q2 2024), Axel Springer-OpenAI (Politico, Business Insider). Content on these platforms has significantly higher training data selection probability (40-65%) than content only on personal websites (5-8%).

Do I get credit when my content is used for training?

No – training data is typically not attributed in model responses. But you still benefit significantly.

When your content becomes part of training data, the AI learns from it but doesn’t cite you when answering questions. However, benefits include: (1) your insights shape how the AI fundamentally understands topics, (2) you create a competitive moat that can’t be displaced until the next model version, (3) you’re more likely to get real-time citations for related queries since the model “knows” your topic area, (4) your methodology and approach can influence how the model explains concepts.

How do AI companies select what to train on?

Through licensing deals, quality filters, authority signals, information density analysis, and engagement metrics.

Selection hierarchy: (1) Licensed partnership data gets priority (Reddit, AP, Stack Overflow, major news outlets – 80-95% selection rate), (2) High-authority public web (Wikipedia, .edu, .gov, major publications – 15-30% selection), (3) General web crawl with heavy quality filtering (only 3-7% makes it through), (4) Explicitly blocked content is excluded (paywalls, robots.txt blocks, login walls). Quality signals include: entity recognition in Knowledge Graph, citation count from other authoritative sources, engagement metrics (time on page, shares, comments), information density (unique data points per word), content stability (available 6+ months consistently).

What content format is best for training data selection?

Fact-dense, structured content with 15-20 unique data points per 1,000 words, clear definitions under 300 characters, step-by-step methodologies, and original research.

High-value formats: (1) Canonical definitions and explanations with explicit comparisons, (2) Data-rich analysis with specific numbers, percentages, and examples, (3) Procedural knowledge and methodologies with clear steps, (4) Historical analysis and longitudinal data, (5) Comparison guides with tables and structured data. Low-value formats that get filtered: duplicate content, promotional material, opinion without supporting data, thin content under 300 words, vague claims without specifics, broken or incomplete content.
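To get a rough read on the density targets above, you can count numeric facts per 1,000 words in a draft. The sketch below is a crude proxy, not how any AI company actually scores content: it assumes a "data point" is any distinct number, percentage, or dollar amount, and `density_report` is a hypothetical helper name. The 300-word threshold comes from the "thin content" line above.

```python
import re

# Crude proxy: a "data point" is any number, optionally with $ or % attached.
NUMBER = re.compile(r"\$?\d+(?:,\d{3})*(?:\.\d+)?%?")


def density_report(text: str) -> dict:
    """Summarize word count and unique numeric data points per 1,000 words."""
    words = text.split()
    data_points = set(NUMBER.findall(text))  # duplicates count once
    per_1000 = 1000 * len(data_points) / len(words) if words else 0.0
    return {
        "words": len(words),
        "unique_data_points": len(data_points),
        "per_1000_words": round(per_1000, 1),
        "thin": len(words) < 300,  # "thin content" threshold from the article
    }


sample = (
    "The median price rose 12% to $1,250,000 in 2024, "
    "up from $1,100,000 in 2023."
)
print(density_report(sample))
```

A single dense sentence like the sample scores far above 15-20 per 1,000 words, so the number only means something on full-length articles. Use it to compare your own drafts against each other rather than as a pass/fail grade.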

When is the training data selection window for GPT-6?

Q4 2025 through Q2 2026 – we’re in the selection window right now.

GPT-5 launched in August 2025 (Q3) and was trained on data selected through early 2025. GPT-6 is expected to launch in Q3-Q4 2026, with training data selection happening approximately 6-12 months before launch. This means content published between Q4 2025 and Q2 2026 has the highest probability of inclusion in GPT-6’s training dataset. Content published after Q2 2026 will likely miss the cutoff and need to wait for GPT-7 (expected 2027-2028).
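The window math is simple enough to sanity-check yourself. The sketch below applies the 6-12 month lead time from the paragraph above to a launch date. The date of 1 October 2026 is purely a hypothetical stand-in for "Q3-Q4 2026", since no launch date has been announced, and the function names are illustrative.

```python
import calendar
from datetime import date


def months_before(d: date, months: int) -> date:
    """Return the date `months` calendar months before d, clamping the day."""
    total = d.year * 12 + (d.month - 1) - months
    year, month_index = divmod(total, 12)
    last_day = calendar.monthrange(year, month_index + 1)[1]
    return date(year, month_index + 1, min(d.day, last_day))


def quarter(d: date) -> str:
    """Format a date as a calendar quarter, e.g. 'Q4 2025'."""
    return f"Q{(d.month - 1) // 3 + 1} {d.year}"


def selection_window(launch: date, min_lead: int = 6, max_lead: int = 12) -> tuple[date, date]:
    """Estimate when training data is selected, given a launch date."""
    return months_before(launch, max_lead), months_before(launch, min_lead)


# Hypothetical launch date; an assumption, not an announced release.
HYPOTHETICAL_LAUNCH = date(2026, 10, 1)
start, end = selection_window(HYPOTHETICAL_LAUNCH)
print(quarter(start), "to", quarter(end))
# prints Q4 2025 to Q2 2026
```

Move the assumed launch to 1 July 2026 and the same math gives Q3 2025 through Q1 2026, so the window estimate is only as good as the launch guess.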

The ROI Reality Check: Should YOU Actually Do This?

Let’s be honest: optimizing for AI training data is a 3-5 year play with unclear ROI.

If you’re a solo consultant, small agency, or local business, your time is better spent on:

- GEO optimization (get cited by current AI models NOW – drives actual traffic)

- Entity SEO (build authority Google AND AI recognize)

- Platform-specific SEO (Reddit, LinkedIn, YouTube – where your clients actually are)

- Traditional Google SEO (still the #1 traffic source for most businesses)

Training data optimization makes sense if:

- ✅ You’re a large publisher/platform with AI licensing deals

- ✅ You’re playing a 5+ year brand authority game

- ✅ You have resources to execute multi-platform strategy at scale

- ✅ You’re already dominating GEO and traditional SEO

Skip training data optimization if:

- ❌ You need leads/traffic in the next 12 months

- ❌ You’re a solo consultant or small team

- ❌ You haven’t mastered GEO and Entity SEO yet

- ❌ You can’t commit to publishing on Reddit/LinkedIn/Medium consistently

My advice? Master the fundamentals first:

- Information Gain – create content AI literally can’t replicate

- GEO – get cited by AI models TODAY

- Entity SEO – build authority that lasts

- Search Everywhere – show up where your audience actually searches

THEN, if you’ve got those dialed in and want to play the long game, consider training data.

But don’t skip the fundamentals chasing the shiny new strategy.

The Bottom Line: Train the Teachers

Here’s what most SEOs still don’t understand in early 2026:

The next 6 months will determine what AI “knows” for the next 2-3 years.

GPT-6, Claude 5, and Gemini 3.0 are being trained RIGHT NOW on data selected between Q4 2025 and Q2 2026. Those models will launch in late 2026 or early 2027 and will serve billions of queries until the next major versions in 2028.

If your content makes it into their training data, you’re locking in 24-36 months of foundational influence over how AI understands your topic.

If it doesn’t, you’re stuck fighting for citations in an increasingly crowded retrieval layer while your competitors who made it into training data have their insights baked into the foundation.

The winners in 2027-2028 will be the ones who understood this in late 2025.

So here’s what to do right now, today:

- Audit your best content: What do you know that AI should learn from you? What proprietary data, methodologies, or insights do you have that don’t exist anywhere else online?

- Reformat for training optimization: Add information density (15-20 unique data points per 1,000 words), create clear structures (definitions, methodologies, step-by-step processes), include tables and comparative data.

- Publish across platforms strategically: Reddit first (licensed platform), LinkedIn second (Microsoft access), Medium third (high authority), your website fourth (canonical source with full schema).

- Document your methodology: Frameworks, procedures, and decision-making processes are training gold because they teach AI how to think, not just what to know.
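For the "canonical source with full schema" step in the list above, one reasonable reading is schema.org structured data embedded as JSON-LD on your own site. The sketch below generates a minimal `Article` block. The properties used (`headline`, `author`, `datePublished`, `mainEntityOfPage`) are standard schema.org fields, but which fields count as "full" is an assumption, and the function name, author, and URL are placeholders.

```python
import json
from datetime import date


def article_jsonld(headline: str, author: str, url: str, published: date) -> str:
    """Build a <script> tag containing minimal schema.org Article JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published.isoformat(),
        "mainEntityOfPage": url,  # marks this URL as the canonical home
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
print(
    article_jsonld(
        headline="Median Home Prices in Turtle Ridge, Irvine",
        author="Jane Example",
        url="https://example.com/turtle-ridge-prices",
        published=date(2026, 1, 15),
    )
)
```

Drop the output into the page's `<head>`. The versions you post on Reddit, LinkedIn, and Medium can then link back to that URL as the original source.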

And remember: before you optimize for training data, make sure you have something worth training on.

That starts with Information Gain – unique insights that literally don’t exist anywhere else online. Structure it with GEO formatting so it’s easily retrievable for citations. Distribute it across all platforms where AI companies are actively licensing content. Build it on a foundation of entity authority so AI companies recognize you as a trusted source.

The algorithm doesn’t just cite sources anymore. It learns from them.

The question isn’t whether AI will know your topic area. The question is: will AI learn it from you or from your competitors?

Are you teaching the next generation of AI? Or are you letting someone else train it while you fight for scraps in the citation layer?

The training window is open. It won’t be for long.